Google Summer of Code project with CERN on Deep Learning Compression

25 Jun 2016

I have been working with the software team at CERN for the past month as part of my Google Summer of Code (GSoC) project. The project deals with adding GPU support for compressed Deep Learning Networks to TMVA (Toolkit for Multivariate Data Analysis), which is a part of the popular ROOT data analysis software framework developed by CERN. I’m mentored by Dr Sergei Gleyzer and Dr Lorenzo Moneta on this project.

TMVA already has a stable framework for Deep Neural Networks (DNNs). In its current form, however, it cannot be used by researchers whose work requires analysing very large amounts of data. This is where GPUs come in: they offer extensive parallelism in a shared memory model, which avoids the communication penalties that come with distributed parallelism. This comes at a price, though, as even the best GPUs offer only a few gigabytes of memory. That is a serious challenge, because frequently transferring data between the CPU and the GPU carries a high communication cost. One workaround is to compress the DNN itself, so that a much larger network can be trained on the same GPU over large-scale data.

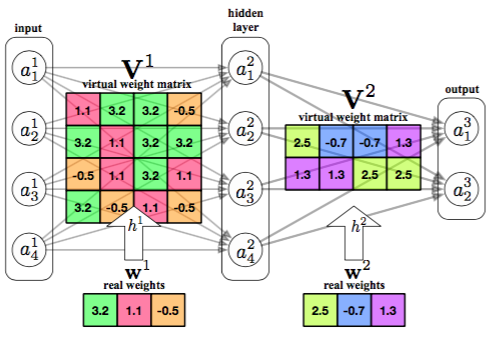

To compress the DNN so that it fits in the limited memory of a GPU, I am using the hashing trick developed by Chen et al. and presented at ICML 2015. Their technique, HashedNets, exploits the redundancy in Deep Neural Networks to achieve drastic reductions in model size. The idea is to use a hash function to group the weights of the DNN into buckets, where all the weights in one bucket share the same value. The authors also compare their technique to other model compression techniques such as Dark Knowledge, and report that HashedNets consistently outperforms them.

I’m using the xxHash open-source library for hashing, as it is extremely fast and runs at close to RAM speed limits.
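To make the bucket idea concrete, here is a minimal sketch in plain Python rather than the actual TMVA code. Everything in it is an assumption made for illustration: the names `bucket_index` and `HashedLayer` are hypothetical, the bucket values are fixed dummy numbers instead of trained weights, and `zlib.crc32` from the standard library stands in for xxHash so the snippet runs without extra dependencies. The sign hash used in the HashedNets paper is left out to keep it short.

```python
import zlib


def bucket_index(layer, i, j, num_buckets):
    """Map the virtual weight at position (i, j) of a layer to a bucket.

    zlib.crc32 is only a stand-in for xxHash here (illustrative assumption).
    """
    key = f"{layer}:{i}:{j}".encode("utf-8")
    return zlib.crc32(key) % num_buckets


class HashedLayer:
    """Fully connected layer whose n_out x n_in weights share num_buckets values."""

    def __init__(self, n_in, n_out, num_buckets, layer=0):
        self.n_in = n_in
        self.n_out = n_out
        self.num_buckets = num_buckets
        self.layer = layer
        # Only these values are stored; dummy numbers instead of trained weights.
        self.buckets = [0.01 * (k + 1) for k in range(num_buckets)]

    def weight(self, i, j):
        # The "virtual" weight W[i][j] is looked up, never stored explicitly.
        return self.buckets[bucket_index(self.layer, i, j, self.num_buckets)]

    def forward(self, x):
        # Plain matrix-vector product over the virtual weight matrix.
        return [
            sum(self.weight(i, j) * x[j] for j in range(self.n_in))
            for i in range(self.n_out)
        ]


layer = HashedLayer(n_in=8, n_out=4, num_buckets=6)
y = layer.forward([1.0] * 8)
print("virtual weights:", layer.n_in * layer.n_out)
print("stored weights: ", len(layer.buckets))
```

Here a layer with 32 virtual weights is backed by only 6 stored values. During training, the gradient for a bucket would be the sum of the gradients of all the virtual weights that hash into it.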

I’ll be using this blog to post updates on my progress with this project, and would really appreciate any constructive criticism :)